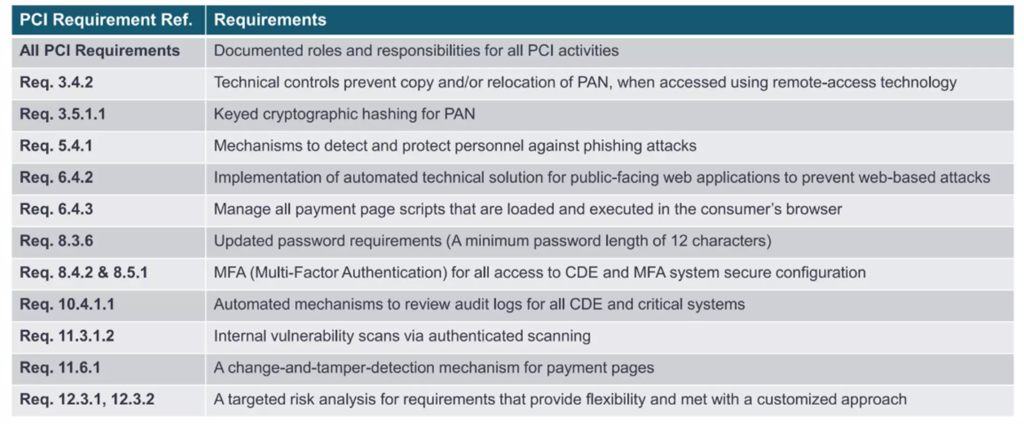

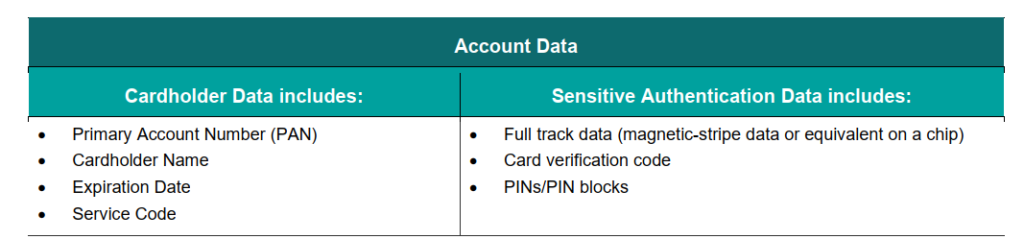

As we delve into the intricacies of the PCI-DSS v4.0 standard, it’s crucial to understand the significance of each requirement and its impact on safeguarding sensitive cardholder data. For this article, we’ll be focusing on Requirement 3.5.1.1, which revolves around the use of keyed cryptographic hashing for protecting Primary Account Numbers (PANs).

Requirement 3.5.1.1 states that “Hashes used to render PAN unreadable (per the first bullet of Requirement 3.5.1) are keyed cryptographic hashes of the entire PAN, with associated key-management processes and procedures in accordance with Requirements 3.6 and 3.7.”

Firstly, like everything else from interpreting history to interpreting your wife’s nuanced tone when she says, “It’s fine.”, everything needs a little contextualization. Luckily for us, PCI has placed some explanation for us to jumpstart the discussion.

A hashing function that incorporates a randomly generated secret key to provide brute force attack resistance and secret authentication integrity.

Definition of Keyed Cryptographic Hash, PCI Glossary

Appropriate keyed cryptographic hashing algorithms include but are not limited to: HMAC, CMAC, and GMAC, with an effective cryptographic strength of at least 128-bits (NIST SP 800-131Ar2).

Refer to the following for more information about HMAC, CMAC, and GMAC, respectively: NIST SP 800-107r1, NIST SP 800-38B, and NIST SP 800-38D).

See NIST SP 800-107 (Revision 1): Recommendation for Applications Using Approved Hash Algorithms §5.3.

So that’s a lot of MACs.

Let’s break it down. The requirements has 3 areas of importance, which we have helpfully underlined.

Keyed Cryptographic Hashes

The requirement mandates the use of keyed cryptographic hashes to render PANs unreadable. A keyed hash basically takes the entire PAN and combines it with a secret key to produce a unique, fixed-size output that is practically impossible to reverse-engineer without knowing the key. This way, even if an attacker gains access to the hashed PAN, they won’t be able to derive the original PAN without the secret key.

In 3.2.1, this was not stated and therefore the assumption that a simple hash was sufficient. Let’s listen to what this old obsolete standard says: “It is recommended, but not currently a requirement, that an additional, random input value be added to the cardholder data prior to hashing to reduce the feasibility of an attacker comparing the data against (and deriving the PAN from) tables of precomputed hash values.”

That aged like milk. Basically they are talking about salt. The goal of salting is to protect against dictionary attacks or attacks using a rainbow table. If no secret salt is used, or if the salt has not been sufficiently protected, the corresponding data, for example the PAN, can be read from the attacker’s previously calculated dictionaries or rainbow tables. So in short, salts are good for the world. Except for Salt Bae. He’s no good.

Salting creates slow hashing, which is the point. So that it takes a few billion years for brute force to be successful. How different is salting from keyed hashes? For one, salts are generally known. Sometimes they are even stored together with the hash in the database. So if let’s say, that’s compromised, Salt is known. I suppose, you can say “Live by the salt, die by the salt.” Ha!

Keyed Crypto Hashes mean there is a secret key. And before you go and jump off the building, there are already existing algorithms out there (The MAC brothers) that has previously been used — primarily, to my knowledge — for message integrity checks. In fact, the MAC here means Message Authentication Code to check integrity and authenticity. Unlike the salt, it ISN’T known, or at least not unprotected. So even if the database is compromised, they can’t get the key, because it’s protected (through encryption, later on to explain).

Now, why the change from normal hash, with recommended Salt, to hash with secret key?

The problem is with card numbers. Those dang card numbers, which is so different from let’s say passwords. Unlike passwords, where it could really be random, credit card numbers are NOT random. They are unique, but they are far from random. You see, a credit card consist of:

- The bank identification number (BIN) or issuer identification number (IIN): The first six digits is the issuer id. You can go https://www.bindb.com/bin-list

- The account number: The number between the BIN and the check digit (the last digit) is six to nine digits long and is used to identify the individual account number.

- The check digit: The last digit, added to validate the authenticity of the credit card number. This is by using the Luhn algorithm.

The thing about Luhn is that it is used to validate primary account numbers. I am not going into details, as other people have done so and will do a much better job in explaining this. But the short of it is that, if I have the BIN, and I have the Luhn and I have, let’s say 3 more numbers of the account number, then you get the picture. The Luhn digit is the result of the luhn algorithm applying to all the previous numbers (right first to left), which you already known, if it’s truncated! You would already know 9 digits (first six, last three, the last being the final luhn result). It’s likely still going to take a lot of effort, but the predictable way credit cards are structured actually provides less fields to be guessed. As scary as it may sound, hashes can be possibly reversed. Well, not ‘reversed’ per se but ‘reconstructed’ through guesswork and bruteforce.

While salt adds complexity and make it slower, salts aren’t secret, remember, so eventually that can still be broken. A Key however is secret. Remember the data encryption key, key encryption keys? Well, hashing now requires the same treatment as encryption in that sense that these keys need to be encrypted.

The other important bit of this requirement is the requirement emphasizes that the entire PAN must be hashed. This is important because hashing only a portion of the PAN would still leave some sensitive information exposed. By hashing the entire PAN, we ensure that no part of it remains in plain text, adding an extra layer of protection.

Lastly, the requirement stresses the importance of proper key management processes and procedures, as outlined in Requirements 3.6 and 3.7. This means that the secret keys used for hashing must be securely generated, stored, and managed throughout their lifecycle. Weak key management can undermine the entire purpose of keyed hashing, so it’s crucial to get this right.

What does this mean?

It means, like a lot of new requirements in v4.0: more work.

It is, in its heart, a concept of defense-in-depth. Requirement 3.5.1.1 serves as a secondary line of defense against unauthorized access to stored PANs. Even if an attacker manages to exploit a vulnerability or misconfiguration in an entity’s primary access control system and accesses the database, the keyed cryptographic hashing of PANs acts as an additional barrier, preventing the attacker from obtaining the actual PANs, unless they manage to compromise the key.

By implementing a secondary, independent control system for managing cryptographic keys and decryption processes, entities can ensure that a failure in the primary access control system doesn’t automatically lead to a breach of PAN confidentiality. For instance, instead of storing the PANs in plain text, a website employs a keyed hashing algorithm, such as HMAC-SHA256, to render the PANs unreadable. Each PAN is combined with a unique, randomly generated secret key before being hashed, and the resulting hash values are stored in the website’s database.

Final note: It’s important to note that Requirement 3.5.1.1 applies to all instances of stored PANs, whether in primary storage (databases, flat files) or non-primary storage (backups, audit logs, exception logs). This means that entities must ensure that keyed cryptographic hashing is implemented consistently across all storage locations, leaving no room for gaps in protection.

However, the requirement does make an exception for temporary files containing cleartext PANs during the encryption and decryption process. This is a practical consideration, as it allows entities to temporarily work with unencrypted PANs while performing necessary operations, as long as the temporary files are properly secured and promptly removed after use.

If you have any questions or need assistance in navigating the complexities of PCI-DSS v4.0, don’t hesitate to reach out to us at avantedge@pkfmalaysia.com. Our team of experienced professionals is here to help you every step of the way, ensuring that your organization stays secure, compliant, and ahead of the curve in the ever-evolving landscape of data security.