Taking a bit of a break from PCI articles, I want to touch a little on SOC, or what we know as Service Organization Controls. Well, it’s actually now called System and Organization Controls, but old habits are hard to break and I keep calling it by its old name.

We’re getting asked more and more about SOC and I think it’s probably a good time to have a detailed series on this particular set of engagements. This one comes with a menu of selections and although it’s not as crazy as PCI-DSS, which resembles the local mixed rice shop with all its choices, SOC does come in a fair few flavors that you need to get used to.

Firstly, let’s talk history.

When discussing SOC, we usually go back to the 1990s, when SAS (Statement on Auditing Standards) 70 was born. Perhaps we need to go a bit further back in time, just for nerd value.

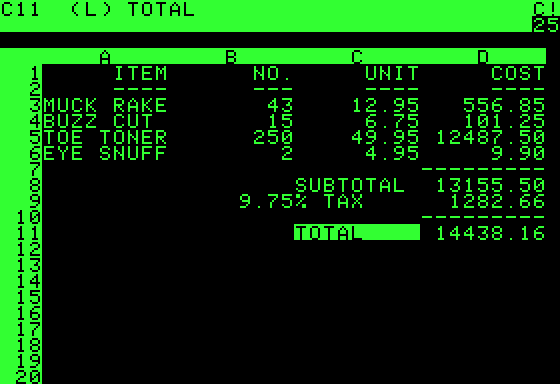

The history of SOC Attestation begins in the 1970s with the rise of computer-based accounting systems. Yes, the 1970s. Here is VisiCalc, the great-grandfather of Excel. Absolutely adore the fonts and colors. I wish computer graphics were like this forever.

So for useless information, I always wondered: if there was a SAS 70, there had to be a SAS 1. And it turned out there was! Back in 1972, but it did not really mention much about computers as back then, everything was likely still running on steam engines and horses with buggies. Oh wait, no, that’s 1872. But still, 1972 in computer terms would be considered the Stone Age. The immortal words of SAS 1 birthed the requirements we have today:

Since the definition and related basic concepts of accounting control are expressed in terms of objectives, they are independent of the method of data processing used; consequently, they apply equally to manual, mechanical, and electronic data processing systems. However, the organization and procedures required to accomplish those objectives may be influenced by the method of data processing used.

Section 320.33 of SAS No. 1

So back in the day, it wasn’t cool to say computers. You strutted around campus with a cigar talking about Electronic Data Processing. That’s the equivalent of people discussing Quantum Physics today.

As it turned out, the mention of controls for EDP (which is what SOC is all about) was spelled out even further in SAS 3, published in December 1974.

Because the method of data processing used may influence the organization and procedures employed by an entity to accomplish the objectives of accounting control, it may also influence the procedures employed by an auditor in his study and evaluation of accounting control to determine the nature, timing, and extent of audit procedures to be applied in his examination of financial statement.

SAS 3

In fact, SAS 3 is a fascinating bedtime read that’s probably the first document to actually list the standards of auditing and controls that we still use today. Segregation of Functions. Execution and recording of transactions. Access to Assets. Reconciliation. Review of Systems. Testing of Compliance. Program change management.

In SAS 3, there is also a statement that would be carried through its successors and on to posterity:

The auditor may conclude that there are weaknesses in accounting control procedures in the EDP portions of the application or applications sufficient to preclude his reliance on such procedures. In that event, he would discontinue his review of those EDP accounting control procedures and forgo performing compliance tests related to those procedures; he would not be able to rely on those EDP accounting control procedures.

SAS 3 Section 26.b

This word, “rely” or “reliance”, comes up quite a lot even today and we will be exploring it throughout this SOC series.

But we do need to move on. As much as I love digging up technology fossils, not everyone appreciates an occasional nerd-out like we do. Right… so as businesses increasingly relied on these systems, there grew a need to ensure their reliability and security. This led to the development of early computer auditing standards. Back in those days, it wasn’t just the accountants getting a bit huffy over EDPs and whatnot. The U.S. National Bureau of Standards decided that the AICPA shouldn’t be the only one weighing in on these EDPs and issued Publication 38, called “Guidelines for Documentation of Computer Programs and Automated Data Systems”, an absolute page-turner, written in a beautiful typewriter font and printed on gorgeously time-yellowed pages.

Focusing back on the venerable SAS standards, other publications (48, 55 and 60 in the 1980s) threw their hats into the ring to address the need for controls at service organizations. They talk and dabble about information technology and controls, communications, internal audit and all that, but they never really fit the bill until the greatest of all arrived: SAS 70, in 1992.

Listen, when I started out in the consulting business in 2010, there were still residues of SAS 70 being talked about. That is how pervasive this standard was when it came to stamping its mark on the standards landscape. It’s worth noting that alongside these auditing standards, the information security field was developing its own frameworks and best practices during this period, and we can talk about PCI, ISO, WebTrust and so on another time. However, SAS 70 was particularly significant in bridging the gap between traditional financial auditing and the assessment of IT controls in service organizations. It was like a magical bridge between the numbers guys dressed in suits in windowless offices and the bearded guy in a T-shirt sitting in the basement in front of his Unix box.

Aside from its now famous use of the term “Service Organizations”, SAS 70 provided guidance for auditing the controls of a service organization. It defined two types of reports:

Type I: Described the service organization’s controls at a specific point in time.

Type II: Included the description of controls and tested their operating effectiveness over a minimum six-month period.

So essentially, even back then there was already a requirement for a Type II to cover six months at minimum. In their words: “Testing should be applied to controls in effect throughout the period covered by the report. To be useful to user auditors, the report should ordinarily cover a minimum reporting period of six months.”

The reasons SAS 70 is now better known than its predecessors or successors are:

Standardization: It provided a uniform approach to assessing and reporting on controls at service organizations. A lot of its sections are still being used today as guideposts for standards and auditing.

Third-party assurance: It allowed service organizations to provide assurance to their clients about their control environment through a single report, reducing redundant audits.

Relevance to IT services: As outsourcing and cloud services grew, SAS 70 became crucial for evaluating IT service providers.

Broad adoption: It was widely recognized across industries and even internationally.

SAS 70 was the staple for auditing service organizations for many years after that until, like all good things, it came to an end. Its younger, more hip replacement, SSAE 16 (Statement on Standards for Attestation Engagements No. 16), came into effect in 2011. This was where SOC1 and SOC2 were introduced. We call this the “Report Category” or “Report Kind” because we can’t call it the report type: “Type” was already taken, with Type I covering the design of controls at a point in time and Type II also covering their operating effectiveness over a period. Like all good accountants, they simply stuck to 1 and 2 to differentiate the categories as well, so we ended up with SOC1 Type I, SOC1 Type II, SOC2 Type I and SOC2 Type II. All very symmetrical, see.

SSAE 16 is the standard behind SOC 1 reports, which focus specifically on controls relevant to financial reporting. They call this “ICFR” – internal control over financial reporting. Two new report “kinds” also need to be noted:

- SOC 2: Addresses controls relevant to security, availability, processing integrity, confidentiality, and privacy. (Performed under ISAE 3000 instead of ISAE 3402 internationally, or AT Section 101 instead of SSAE 16 in the US.)

- SOC 3: A simplified version of SOC 2 for general public use.

Let’s not get started on SSAE 16’s distant international twin, the International Standard on Assurance Engagements (ISAE) 3402, which is actually what’s used here in Malaysia. Now again, don’t get too confused, because SSAE 16 and ISAE 3402 are specifically for SOC1 reports, not SOC2. SOC1.

None of this actually matters much, because eventually SSAE16 was succeeded by SSAE18 in 2017 and then, in 2020, by SSAE21. So essentially, to simplify, SOC1 or SOC2 reports are just another way of saying these are reports based on the SSAE or ISAE standards. So technically, if you want to be really pedantic, you can throw a fit when people say, I want to ‘certify’ to ‘SOC standards’! Because there is no such thing as a SOC standard and it’s not ‘certification’, it’s ‘attestation’!

But that’s another story for another article as I’ve hit my word count and surpassed it. If you want to know more about how we service our customers in SOC and don’t mind a bit of history, let us know at avantedge@pkfmalaysia.com. We’ll definitely get back to you!