If you have been doing PCI-DSS for some years, you have probably come across this term called FIM (File Integrity Montioring), which sometimes absolutely befuddles our customers. They generally think this is part of a wider SIEM or SOAR solution but not necessarily so. We’ll explore a little on why FIM is important, how it impacts PCI-DSS, some examples on configuration and what alternatives are there (if any). Here we go!

File Integrity Monitoring is the process of validating the integrity of operating system and application software files. It ensures that files have not been altered or compromised, whether maliciously or accidentally.

- Detecting Unauthorized Changes: FIM helps in detecting unauthorized changes to critical system files, configurations, and content files. These changes could be indicative of a breach, malware infection, or insider threat.

- Compliance Requirements: Many regulatory standards, such as PCI-DSS, HIPAA, and SOX, require FIM as part of their compliance criteria. It ensures that sensitive data is protected and that the integrity of the system is maintained.

- Preventing Data Breaches: By monitoring file changes, FIM can provide early warning signs of a potential data breach. It allows organizations to take proactive measures to prevent unauthorized access to sensitive information.

- Enhancing Forensic Analysis: FIM provides detailed logs of file changes, aiding in forensic analysis. It helps in understanding the nature of an attack, the affected files, and the potential impact.

Let’s pause for now and see if common Antivirus/antimalware can take over this compliance requirement without deploying a specific FIM. Why? Because all companies generally have some sort of anti-virus running in their systems and all companies are stingy in their compliance spending, so part of our job is to see if current technology can be sufficient to address compliance requirements. The difference between Anti virus and FIM boils down to the reason of their existence, their meaning to life and everything. Its 42!

While FIM focuses on monitoring the integrity of files, antivirus and antimalware solutions are designed to detect and remove malicious software.

- Antivirus: Primarily targets known viruses and relies on signature-based detection. It may not detect unauthorized changes to files unless they are associated with a known virus signature.

- Antimalware: Broader in scope, antimalware solutions target various malicious software, including viruses, spyware, and ransomware. Like antivirus, it may not detect subtle unauthorized file changes.

FIM complements these solutions by providing an additional layer of security, focusing on the integrity of files rather than just malicious content.

FIM also differs from Security Information and Event Management (SIEM) and Security Orchestration, Automation, and Response (SOAR) solutions. That being said, its common that these systems are bundled along with FIM solutions so while it’s possible that SIEM may have FIM, it might not be true that FIM has SIEM. They are like, maybe a dysfunctional family who sometimes get together over Chinese New Year reunions.

- SIEM: SIEM solutions collect and analyze log data from various sources to provide real-time analysis of security alerts. While SIEM can include FIM as a component, it encompasses a broader range of security monitoring functions.

- SOAR: SOAR solutions focus on automating and orchestrating security operations. They help in coordinating various security tools and processes. Unlike FIM, which is more focused on file integrity, SOAR aims to streamline security operations and response.

FIM makes its appearance in PCI-DSS v4.0 in requirement 10, specifically 10.2, 10.3,10.4,10.5,10.7 and further on in 11.5, 12.10 and A3.5.1.

In 10.2, PCI basically wants FIM to be part of the logging requirements in terms of what to capture, retention, response and so on. Make sure your FIM is configured to monitor the critical files, and the details of the FIM logs has user and process details, who is responsible for the change event and captured in real time. Ensure alerts are generated for change events by privileged accounts which can be further correlated to create an automated incident. Also, make sure changes to log file security settings or removal of log files triggers real time alerts, with exhaustive event details. All creation and deletion activities are captured as well, and all event details must be as per 10.2.2 for the FIM log files.

10.3.4 makes specific mention of FIM but there is some confusion to this requirement ” File integrity monitoring or change-detection mechanisms is used on audit logs to ensure that existing log data cannot be changed without generating alerts. “. Obviously if you try to monitor for changes in a log file and alert everytime that file is changed, your SIEM or SOAR will light up like Christmas. Because of the nature of log files, it is supposed to change! So to avoid the noise, ensure log files are monitored for changes in security settings, like permissions or ownership. If a log file is deleted, that is also an anomaly. And for those logs that are archived or digitally signed, if any changes are made to these, then your FIM should be able to detect it.

Requirement 11 doesn’t change much for V4.0 — it is the main portion for FIM in 11.5.2 and it remains pretty much the same. Requirement 12.10.5 does provide an explicit requirement to include FIM alerts into incident management and response. But you know that already, right?

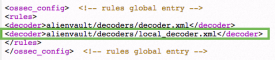

There are plenty of FIM solutions out there. The common ones we see is OSSEC which is deployed together with Alienvault previously. Tripwire is also a well known name in the FIM arena. If you want to explore the inbuilt Linux version of FIM, auditd might be worth your time. For those unfamiliar with auditd, it’s a component that provides auditing functionality for the Linux kernel. It’s widely used for security monitoring, system troubleshooting, and compliance reporting. Configuring auditd might be intimidating to some at first, but here’s some rules to get you started, found in this link

https://github.com/linux-audit/audit-userspace/blob/master/rules/30-pci-dss-v31.rules

In summary, it covers the following areas (config has been omitted in this article, you can go to the site to get the details)

- User Access Linking (10.1): Implicitly met by the audit system.

- User Access to Cardholder Data (10.2.1): Requires a watch on the database, excluding daemon access. (Path to the database must be specified.)

- Logging Administrative Actions (10.2.2): Enable tty logging for

suandsudo. Special cases forsystemd-runandpkexecare included. - Monitoring Privilege Escalation Configuration (10.2.2): Watches changes to

/etc/sudoersand/etc/sudoers.d/. - Access to Audit Trails (10.2.3): Monitors access to

/var/log/audit/and specific audit commands. - Invalid Logical Access Attempts (10.2.4): Naturally met by PAM.

- Logging of Identification & Authentication (I&A) Mechanisms (10.2.5.a): Handled by PAM.

- Logging of Privilege Elevation (10.2.5.b): Monitors specific syscalls related to privilege elevation.

- Logging Account Changes (10.2.5.c): Watches changes to account-related files like

/etc/group,/etc/passwd, etc. - Time Data Protection (10.4.2b): Places rules to check time synchronization.

- Securing Audit Trails (10.5): Includes various measures to protect audit logs, limit viewing, prevent unauthorized modifications, back up files, and monitor log modifications.

So, there you go. Lastly, though since PCI v4.0 came out, the council seem to have made distinction of change detection mechanisms vs File integrity monitoring, stating that FIM is part of CDM, sort of like a subset. I suppose this gives a little more leeway for companies to implement other types of CDM other than FIM, although FIM is probably the only one that can address all the above requirements comprehensively and without any need for compensating controls. But just for some ideas, the below may be a list of other CDMs that can possibly address the FIM functionalities in part, automated or manual:

- Version Control Systems: These systems track changes to files and code within a development environment. They allow developers to see what was changed, who changed it, and why. Tools like Git, Subversion, and Mercurial are examples of version control systems that provide change detection.

- Database Monitoring Tools: These tools monitor changes to database schemas, configurations, and content. They can alert administrators to unauthorized alterations, additions, or deletions within the database. Tools like Redgate SQL Monitor or Oracle Audit Vault are examples.

- Configuration Management Tools: Configuration management tools like Ansible, Puppet, and Chef can detect changes in system configurations. They ensure that systems are consistently configured according to predefined policies and can alert administrators to unauthorized changes.

- Network Anomaly Detection Systems: These systems monitor network behavior and alert to changes that may indicate a security threat. They can detect changes in traffic patterns, unusual login attempts, or alterations to network configurations.

- Endpoint Detection and Response (EDR) Solutions: EDR solutions monitor endpoints for signs of malicious activities and changes. They can detect changes in system behavior, file activities, and registry settings, providing a broader view of potential security incidents.

- Log Monitoring and Analysis Tools: Tools like Splunk or LogRhythm analyze log files from various sources to detect changes in system behavior, user activities, or security settings. They can provide real-time alerts for suspicious changes.

- Digital Signature Verification: Some systems use digital signatures to verify the integrity of files and data. Any alteration to the digitally signed content would cause a verification failure, alerting to a potential unauthorized change.

- Cloud Security Tools: With the rise of cloud computing, tools like AWS Config or Azure Security Center provide change detection for cloud resources. They monitor configurations, permissions, and activities within the cloud environment.

Again, we would highly recommend that a FIM be used, but in the case where it is not possible in that environment, for instance Cloud environment, then other CDMs can be possible. If you need to know more about FIM and PCI or any compliance in general, drop us a note at pcidss@pkfmalaysia.com and we will get back to you immediately!